Extract, Transform, Load (ETL) Craigslist Web Scraper

Goal: To curate a dataset of math tutoring prices that can be used to understand the local and national competition.

Python, PostgreSQL, HTML, RegExWeb Scraping, Data Cleaning

Background

As someone who runs a tutoring business where a large portion of its business comes from Craigslist , I wanted to understand what competing tutors were charging nearby and in other parts of the country. Using Python, I built this web scraper to extract the prices of tutors from posts found in Craigslist's services section.The full project can be found on my GitHub.

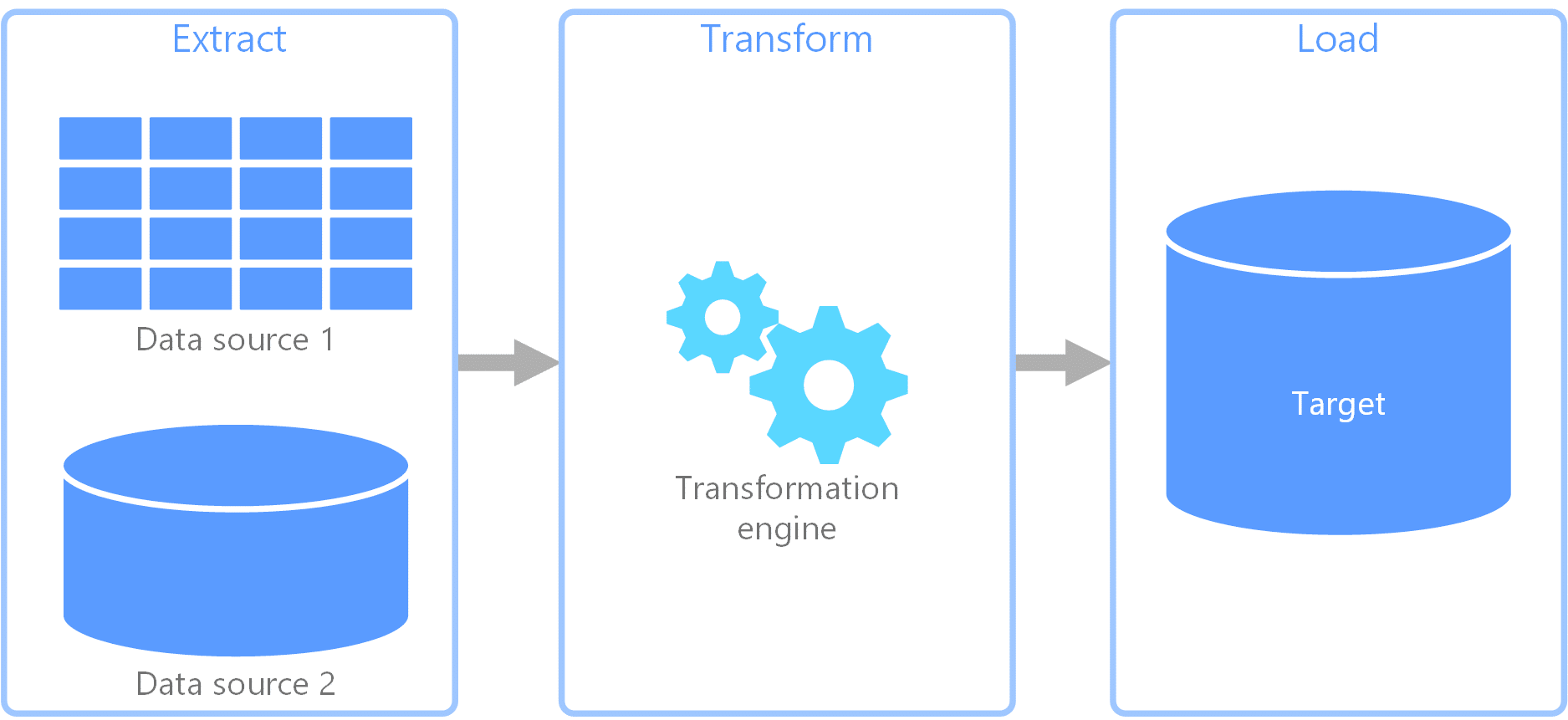

The process:

- Extract posting information from Craigslist.

- Transform the data to my own specifications.

- Load the data into a PostgreSQL database.

Part 1: Extract

Pandas, Requests and BeautifulSoupTo begin, I created functions that would perform various tasks necessary to scrape posting information for Craigslist.

-

get_state_and_region_tags():

- Extracts the state and region names for all geographic areas.

- Ex: California - Sacramento, California - SFbayarea, Florida - Miami

-

get_state_to_region_dict():

- Uses state and region names to construct a dictionary containing URLs for each unique state and region pair.

- Ex: sacramento.craigslist.org, sfbayarea.craigslist.org, miami.craigslist.org

-

get_region_search_page_urls():

- Initiate an HTTP request to each URL and perform a search for "math tutor" in the services section of each region. Extracts the HTML of the search results.

-

get_urls_of_posts():

- Crawls the HTML of the search results page, extracting the URL of each posting contained within.

-

convert_urls_to_soup_objs():

- Extracts and parses the HTML code from every posting using BeautfulSoup.

Part 2: Transform

Pandas, NumPy, and SklearnNext, I began inspecting the posts to determine the best way to extract the information I wanted.

2.1 Drop duplicate posts

I was surprised to find that, although I had scraped 4,720 postings nationwide, only 1,257 of them were unique. I discovered that many math tutors place ads in multiple geographic locations, hence why there were so many duplicate postings.

Investigating further, I found some tutors change the wording of their posts, making detection difficult. Instead of removing posts with text that matches character for character, I had to get creative. In the end, I solved the problem using vector embeddings and something known as the cosine similarity ratio. By calculating the ratio between each pair of postings, I could identify when a post was "similar enough" to another posting and mark it as a duplicate for subsequent removal. After removing these posts, only 685 remained, or about 15.41% of the posts that were originally scraped.

2.2 Determining the hourly rate

Reading through some of the postings showed they can be fairly different from tutor to tutor. One post may have no pricing information, while another post can have a single flat hourly rate, and others can have more complicated pricing schedules that change based on the length of a tutoring session (ex: $30 for 1 hr, $50 for 2 hrs), or a multitude of other factors like the subject tutored (ex: algebra vs. calculus).

My plan for extracting the different prices depended on how complicated the pricing schedule was. First, I used RegEx to identify any postings without any pricing information and excluded them from the analysis. This brought the number of postings down to 300. From there, any posts that had a single hourly rate were kept, and I used RegEx to extract the pricing information. However, the implementation for handling posts with two or more prices is as follows:

- If two prices were mentioned in a post, extract them using RegEx and average them.

- If three or more prices are mentioned, flag the post, then manually inspect and determine the price later.

Part 3: Load

Pandas and Psycopg2After transforming the data, it gets loaded into a Pandas DataFrame, exported to an Excel CSV file, and then Psycopg2 inserts rows from the CSV file into a local PostgreSQL database.

The analysis of the data that was collected can be found here.